What is Deep Learning?

Deep Learning is a new area of Machine Learning research, which has been introduced with the objective of moving Machine Learning closer to one of its original goals: Artificial Intelligence.

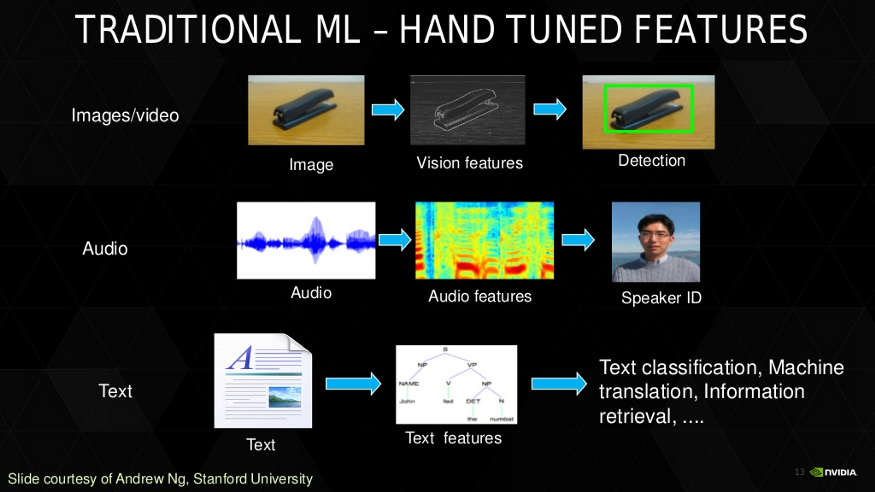

Deep Learning is Hierarchical Feature Learning

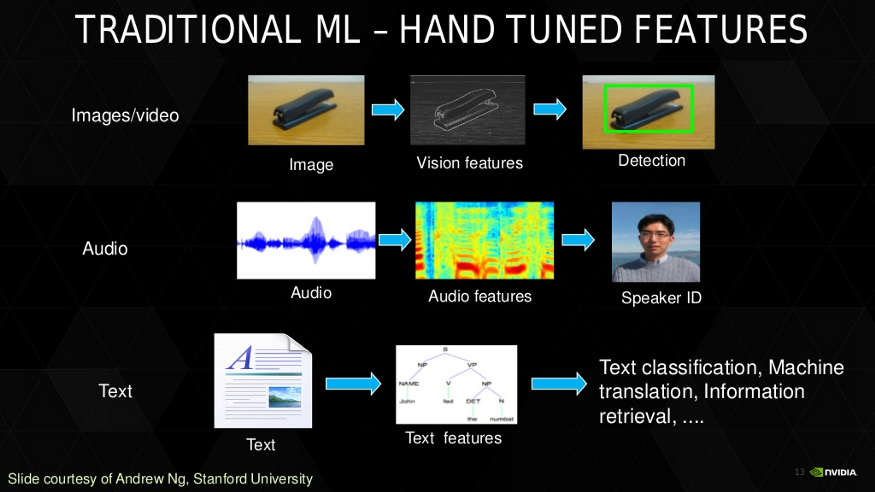

In addition to scalability, another often cited benefit of deep learning models is their ability to perform automatic feature extraction from raw data, also called feature learning.

Why is ‘Deep Learning’ called deep?

It is because of the structure of artificial neural networks (ANNs). Forty years ago, neural networks were only 2 layers deep because it was not computationally feasible to build larger ones. Now it is common to have neural networks with 10+ layers, and even ANNs with 100+ layers are being tried.

You can essentially stack layers of neurons on top of each other. The lowest layer takes the raw data (images, text, sound, etc.), and each neuron stores some information about the data it encounters. Each neuron in a layer sends information up to the next layer of neurons, which learns a more abstract version of the data below it. So the higher you go, the more abstract the features you learn. The pictured network has 5 layers, 3 of which are hidden layers.
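To make the idea of stacking concrete, here is a minimal NumPy sketch of a forward pass through 5 layers (input, 3 hidden, output). The layer sizes and the random, untrained weights are assumptions made only for illustration, and ReLU is applied at every layer for simplicity.

```python
import numpy as np

def relu(x):
    return np.maximum(0.0, x)

def forward(x, weights, biases):
    """Pass x through each layer in turn and keep every layer's activations."""
    activations = [x]
    for W, b in zip(weights, biases):
        x = relu(x @ W + b)  # each layer only sees the output of the layer below
        activations.append(x)
    return activations

rng = np.random.default_rng(0)
layer_sizes = [8, 6, 5, 4, 3]  # input, 3 hidden, output (hypothetical sizes)
weights = [rng.normal(size=(m, n))
           for m, n in zip(layer_sizes[:-1], layer_sizes[1:])]
biases = [np.zeros(n) for n in layer_sizes[1:]]

raw = rng.normal(size=(1, layer_sizes[0]))  # stand-in for raw input data
acts = forward(raw, weights, biases)
for i, a in enumerate(acts):
    print("layer", i, "output shape:", a.shape)
```

In a real network the weights would be learned from data; here they only show how information flows upward from layer to layer.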

In deep learning, ANNs extract features automatically, replacing the manual extraction done in feature engineering. Take an image as an example input. Instead of taking the image and computing features by hand, such as the distribution of colors, image histograms, or the distinct color count, we just feed the raw image into the ANN. ANNs have already proved their worth on images, and they are now being applied to all kinds of other data, such as raw text and numbers. This lets the data scientist concentrate more on building deep learning algorithms.
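For contrast, this is roughly what hand-computed image features look like. The sketch below assumes an image stored as a uint8 NumPy array of shape (height, width, 3); the function name and the choice of 8 histogram bins are hypothetical.

```python
import numpy as np

def handcrafted_features(image, bins=8):
    """Compute a few manual features from a (height, width, 3) uint8 image."""
    pixels = image.reshape(-1, 3)
    # Per-channel intensity histogram, normalised by the number of pixels
    hist = [np.histogram(pixels[:, c], bins=bins, range=(0, 256))[0]
            for c in range(3)]
    hist = np.concatenate(hist) / pixels.shape[0]
    # Number of distinct (R, G, B) colors in the image
    distinct_colors = len(np.unique(pixels, axis=0))
    # Average color over the whole image
    mean_color = pixels.mean(axis=0)
    return hist, distinct_colors, mean_color

# Tiny test image: top half red, bottom half black
image = np.zeros((4, 4, 3), dtype=np.uint8)
image[:2] = (255, 0, 0)

hist, distinct, mean_color = handcrafted_features(image)
print(distinct)      # 2
print(mean_color)
```

With a deep network, none of this is written by hand: the raw pixel array itself is the input.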

What is the most common thing required for deep learning?

DATA, duh?

Soon, feature engineering may become obsolete, but deep learning algorithms will still need massive amounts of data to feed our models. Fortunately, we now have big data sources that were not available two decades ago: Facebook, Twitter, Wikipedia, Project Gutenberg, etc.

Now, what is an API?

An API is the interface through which you access someone else’s code, or through which someone else’s code accesses yours. In effect, it is the set of public methods and properties.

An example: you are buying an item online with your credit card. You provide your credit card details and press the continue button, and the site tells you whether your information is correct. A lot happens in the background to produce that result.

The application sends your credit card details to a remote application, which validates your information and sends the result back to your application. An API is what connects the two in this scenario.

I hope this helps beginners who don’t really understand what an API is.

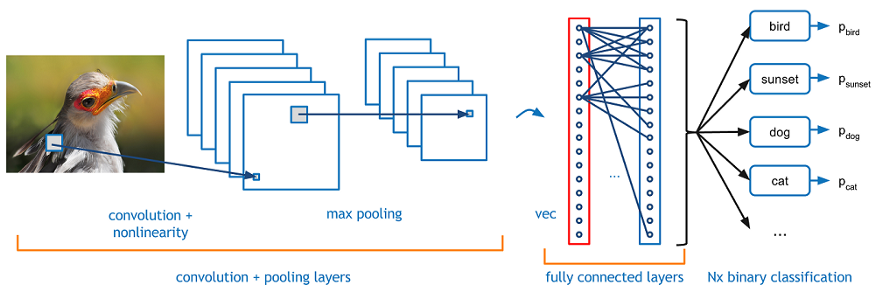

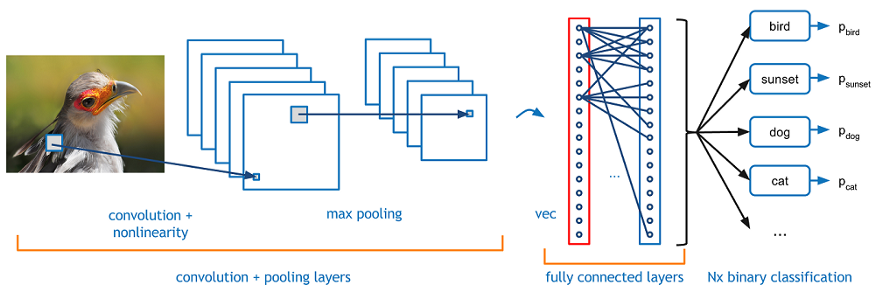

What is a CNN (convolutional neural network)?

Convolutional Neural Networks (ConvNets or CNNs) are a category of neural networks that have proven very effective in areas such as image recognition and classification. ConvNets have been successful in identifying faces, objects and traffic signs, as well as powering vision in robots and self-driving cars.

There are four main operations in a ConvNet:

- Convolution

- Non Linearity (ReLU)

- Pooling or Sub Sampling

- Classification (Fully Connected Layer)
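The four operations can be sketched in plain NumPy on a single-channel image. This is a simplified illustration, not how Keras implements them: the 6x6 random image, the edge-detecting kernel, and the 3 output classes are all assumptions.

```python
import numpy as np

def convolve2d(image, kernel):
    """Convolution step: slide the kernel over the image (no padding, stride 1)."""
    kh, kw = kernel.shape
    oh, ow = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.zeros((oh, ow))
    for i in range(oh):
        for j in range(ow):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def relu(x):
    """Non-linearity: replace negative values with zero."""
    return np.maximum(0.0, x)

def max_pool(x, size=2):
    """Pooling: keep the maximum of each non-overlapping size x size window."""
    h, w = x.shape[0] // size, x.shape[1] // size
    x = x[:h * size, :w * size]
    return x.reshape(h, size, w, size).max(axis=(1, 3))

def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()

rng = np.random.default_rng(0)
image = rng.random((6, 6))
kernel = np.array([[1.0, 0.0, -1.0]] * 3)  # simple vertical-edge filter

# 6x6 image -> 4x4 after convolution -> 2x2 after pooling
feature_map = max_pool(relu(convolve2d(image, kernel)))

# Classification: a fully connected layer over the flattened features
W = rng.normal(size=(feature_map.size, 3))  # 3 hypothetical classes
scores = softmax(feature_map.reshape(-1) @ W)
print(feature_map.shape, scores)
```

A real ConvNet repeats the first three steps many times with many learned kernels before the fully connected layer.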

- A ConvNet architecture is, in the simplest case, a list of layers that transform the image volume into an output volume (e.g. holding the class scores).

- There are a few distinct types of layers (CONV/FC/RELU/POOL are by far the most popular).

- Each layer accepts an input 3D volume and transforms it to an output 3D volume through a differentiable function.

- Each layer may or may not have parameters (e.g. CONV/FC do, RELU/POOL don’t).

- Each layer may or may not have additional hyperparameters (e.g. CONV/FC/POOL do, RELU doesn’t).
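As a rough illustration of the difference between parameters and hyperparameters, the helper functions below (hypothetical names, not part of any library) count the learnable parameters of CONV and FC layers and compute the output size from the kernel size, stride and padding hyperparameters. RELU and POOL layers have no learnable parameters at all.

```python
def conv_params(in_channels, out_channels, kernel_size):
    """Learnable parameters of a CONV layer: one filter plus one bias per output channel."""
    return (kernel_size * kernel_size * in_channels + 1) * out_channels

def fc_params(in_features, out_features):
    """Learnable parameters of an FC layer: a weight per input plus one bias, per output."""
    return (in_features + 1) * out_features

def output_size(in_size, kernel_size, stride=1, padding=0):
    """Spatial output size of a CONV or POOL layer along one dimension."""
    return (in_size + 2 * padding - kernel_size) // stride + 1

# A 3x3 convolution from 3 input channels (RGB) to 64 filters
print(conv_params(3, 64, 3))                   # 1792
# A fully connected layer from 4096 units to 1000 class scores
print(fc_params(4096, 1000))                   # 4097000
# 3x3 convolution with padding 1 keeps a 224-pixel side unchanged
print(output_size(224, 3, stride=1, padding=1))  # 224
# 2x2 pooling with stride 2 halves it
print(output_size(224, 2, stride=2))           # 112
```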

Requirements

- Python

- Flask (a web framework for Python)

- Keras (with TensorFlow as the backend)

- numpy

- Pillow

Basic Information of Flask

Flask.pocoo.org

    from flask import Flask

    app = Flask(__name__)

    @app.route('/')
    def hello():
        return "Hello World!"

    # <name> captures a URL segment and passes it to the function
    @app.route('/<name>')
    def hello_name(name):
        return "Hello {}!".format(name)

    if __name__ == '__main__':
        app.run()

1. The route() decorator tells Flask which URL should trigger our function.

2. The function is given a name, which is also used to generate URLs for that particular function, and it returns the message we want to display in the user’s browser.
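Both points can be checked without starting a server. The sketch below assumes the second route captures the name from the URL as `/<name>`; it uses Flask's `url_for` to build URLs from function names and the built-in test client to trigger the functions.

```python
from flask import Flask, url_for

app = Flask(__name__)

@app.route('/')
def hello():
    return "Hello World!"

@app.route('/<name>')
def hello_name(name):
    return "Hello {}!".format(name)

# Point 2: the function name is what url_for uses to generate a URL
with app.test_request_context():
    print(url_for('hello'))                     # /
    print(url_for('hello_name', name='Alice'))  # /Alice

# Point 1: requesting a URL triggers the function registered for it
client = app.test_client()
print(client.get('/').get_data(as_text=True))       # Hello World!
print(client.get('/Alice').get_data(as_text=True))  # Hello Alice!
```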

We are going to build an API with Flask that serves a pre-trained VGG model from Keras.

VGG, now what is that?

It usually refers to a deep convolutional network for object recognition developed and trained by Oxford’s renowned Visual Geometry Group (VGG), which achieved very good performance on the ImageNet dataset.

In code, it looks something like this (an abridged excerpt, written against the older Keras 1 API with channels-first input):

    model = Sequential()
    model.add(ZeroPadding2D((1,1), input_shape=(3,224,224)))
    model.add(Convolution2D(64, 3, 3, activation='relu'))
    model.add(ZeroPadding2D((1,1)))
    model.add(Convolution2D(64, 3, 3, activation='relu'))
    model.add(MaxPooling2D((2,2), strides=(2,2)))

    model.add(ZeroPadding2D((1,1)))
    model.add(Convolution2D(128, 3, 3, activation='relu'))
    model.add(ZeroPadding2D((1,1)))
    model.add(Convolution2D(128, 3, 3, activation='relu'))

    # ... the remaining layers are omitted in this excerpt ...

    model.add(Dropout(0.5))
    model.add(Dense(4096, activation='relu'))
    model.add(Dropout(0.5))
    model.add(Dense(1000, activation='softmax'))

Example of full code:

    from io import BytesIO

    import numpy as np
    import requests
    from flask import Flask, abort, jsonify, make_response, request
    from keras import backend as K
    from keras.applications import VGG19, imagenet_utils
    from keras.preprocessing.image import img_to_array
    from PIL import Image

    # Use the TensorFlow ordering: (height, width, channels)
    K.set_image_data_format('channels_last')

    app = Flask(__name__)

    # If we are using the InceptionV3 or Xception networks, then we
    # need to set the input shape to (299x299) [rather than (224x224)]
    # and use a different image processing function
    inputShape = (224, 224)
    preprocess = imagenet_utils.preprocess_input

    # Load the network once at startup rather than on every request
    model = VGG19(weights="imagenet")

    def load_img(path, grayscale=False, target_size=None):
        # Download the image at `path` and return it as a PIL image
        response = requests.get(path)
        img = Image.open(BytesIO(response.content)).resize(inputShape)
        if grayscale:
            if img.mode != 'L':
                img = img.convert('L')
        else:
            if img.mode != 'RGB':
                img = img.convert('RGB')
        if target_size:
            # target_size is (height, width); PIL expects (width, height)
            wh_tuple = (target_size[1], target_size[0])
            if img.size != wh_tuple:
                img = img.resize(wh_tuple)
        return img

    def predict(image):
        # Convert the PIL image to a batch containing one array
        image1 = img_to_array(image)
        image1 = np.expand_dims(image1, axis=0)
        # pre-process the image using the appropriate function based on the
        # model that has been loaded (i.e., mean subtraction, scaling, etc.)
        image1 = preprocess(image1)
        # classify the image
        preds = model.predict(image1)
        P = imagenet_utils.decode_predictions(preds)
        for (i, (imagenetID, label, prob)) in enumerate(P[0]):
            print("{}. {}: {:.2f}%".format(i + 1, label, prob * 100))
        # return the label with the highest probability
        (imagenetID, label, prob) = P[0][0]
        return label

    def read_image_from_url(url):
        response = requests.get(url)
        img = Image.open(BytesIO(response.content))
        img = img.resize(inputShape, Image.LANCZOS).convert('RGB')
        return img

    def read_image_from_ioreader(image_request):
        img = Image.open(BytesIO(image_request.read()))
        img = img.resize(inputShape, Image.LANCZOS).convert('RGB')
        return img

    @app.route('/api/v1/classify_image', methods=['POST'])
    def classify_image():
        # Returns None instead of raising when the body is not JSON
        payload = request.get_json(silent=True) or {}
        if 'image' in request.files:
            print("Image request")
            img = read_image_from_ioreader(request.files['image'])
        elif 'url' in payload:
            print("JSON request: ", payload)
            img = read_image_from_url(payload['url'])
        else:
            abort(400)  # neither an uploaded image nor a URL was sent
        resp = predict(img)
        return make_response(jsonify({'message': resp}), 200)

    if __name__ == '__main__':
        app.run(debug=True, port=5432)

Flask’s jsonify() function returns a flask.Response object that already has the appropriate content-type header, ‘application/json’, for JSON responses. json.dumps(), by contrast, just returns an encoded string, so the MIME type header must be added manually.

So instead of jsonify() we can also use json.dumps(), as long as we set that header ourselves.
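The difference can be seen side by side in a small sketch. The payload below is a made-up example; a test request context is used only so that the snippet runs without a server.

```python
import json
from flask import Flask, jsonify, make_response

app = Flask(__name__)
payload = {'message': 'orange'}  # hypothetical prediction result

with app.test_request_context():
    # jsonify builds the Response and sets the JSON content type for us
    via_jsonify = jsonify(payload)

    # json.dumps only produces a string, so we set the MIME type ourselves
    via_dumps = make_response(json.dumps(payload))
    via_dumps.mimetype = 'application/json'

print(via_jsonify.mimetype, via_jsonify.get_json())
print(via_dumps.mimetype, via_dumps.get_json())
```

Both responses end up equivalent for the client; jsonify() simply saves the extra step.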

Python Imaging Library (abbreviated as PIL) (in newer versions known as Pillow) is a free library for the Python programming language that adds support for opening, manipulating, and saving many different image file formats. An RGB image, sometimes referred to as a truecolor image, is stored as an m-by-n-by-3 data array that defines red, green, and blue color components for each individual pixel. RGB images do not use a palette.
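A quick way to see the m-by-n-by-3 layout is to convert a Pillow image to a NumPy array. The tiny solid-color image here is just an illustrative assumption.

```python
import numpy as np
from PIL import Image

# Pillow sizes are (width, height); this image is 4 pixels wide, 2 high
img = Image.new('RGB', (4, 2), color=(255, 128, 0))

arr = np.asarray(img)
print(arr.shape)    # (2, 4, 3): rows, columns, and the R, G, B components
print(arr[0, 0])    # the red, green and blue values of the top-left pixel

# A grayscale ('L') image has a single value per pixel instead of three
gray = np.asarray(img.convert('L'))
print(gray.shape)   # (2, 4)
```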

Now, how do we post requests with Python?

    import json
    import requests

    headers = {'Content-type': 'application/json'}
    imageurl = 'http://pngimg.com/uploads/orange/orange_PNG780.png'
    data = {'url': imageurl}

    res = requests.post('http://localhost:5432/api/v1/classify_image',
                        data=json.dumps(data), headers=headers)
    print(res.text)
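The endpoint also looks for an uploaded file under the form field 'image'. A possible client for that path is sketched below; the helper name and the in-memory test image are assumptions, and the request is only prepared here, not sent, so the snippet runs without the server.

```python
import io

import requests
from PIL import Image

def build_upload_request(url, image):
    """Prepare a multipart POST that uploads a PIL image as the 'image' field."""
    buf = io.BytesIO()
    image.save(buf, format='PNG')
    buf.seek(0)
    files = {'image': ('image.png', buf, 'image/png')}
    return requests.Request('POST', url, files=files).prepare()

img = Image.new('RGB', (224, 224), color=(255, 165, 0))  # stand-in image
req = build_upload_request('http://localhost:5432/api/v1/classify_image', img)
print(req.method, req.headers['Content-Type'])

# To actually send it, with the Flask server running:
# res = requests.Session().send(req)
# print(res.text)
```

Note that no Content-type header is set by hand here: requests generates the multipart header, including its boundary, from the `files` argument.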